Computer system architecture is the basic plan behind every computing device. It describes how hardware and software work together so that instructions are carried out correctly and efficiently.

At its heart, computer architecture is the design that shapes a machine’s abilities and limits. It covers everything from high-level organisation to fine implementation detail.

This architecture matters because it affects how fast a system runs, how much power it uses, and how well it handles its workload. Engineers use this knowledge to make devices faster and more efficient.

Knowing these computing fundamentals is vital. It helps us understand how technology grows and changes. This knowledge is essential for all sorts of devices, from personal items to big servers.

Understanding Computer System Structure

Computer system structure describes how a computer’s parts are organised to handle information and carry out tasks. Like a blueprint, it shows how each hardware and software component fits with the others, which helps explain how computers achieve what they do.

The Architectural Foundation of Computing

The architectural foundation of computing concerns how a system moves, stores, and processes data. It is the base on which general-purpose computers are designed.

Key parts of this computing foundation are:

- How components connect

- Systems for managing data flow

- How processing units are organised

- Design of memory hierarchy

Together, these elements form a system whose parts are designed to cooperate rather than operate in isolation.

Historical Development of Computer Architectures

The history of computer architecture begins with ideas that predate electronic computers. Those ideas led to today’s digital systems.

Charles Babbage proposed the Analytical Engine in the 1830s, and Ada Lovelace’s notes on it described how such a machine could be programmed. Their ideas helped shape computer design.

In 1936, Konrad Zuse described in patent applications how machine instructions could be held in the same storage as data. This was an early statement of the stored-program concept, which changed how computers work by letting programs be stored and handled like any other data.

John von Neumann’s 1945 paper, the First Draft of a Report on the EDVAC, was key to computer architecture. His work, along with Alan Turing’s, shaped today’s designs.

The earliest documented use of the term “architecture” in computing dates to 1959, in work by Lyle R. Johnson and Frederick P. Brooks, Jr. at IBM. It marked the emergence of computer architecture as a field in its own right.

| Year | Innovator | Contribution | Impact |
|---|---|---|---|
| 1837 | Charles Babbage | Analytical Engine Concept | First design for a general-purpose mechanical computer |
| 1936 | Konrad Zuse | Stored-program Concept | Instructions held in the same storage as data |
| 1945 | John von Neumann | Logical Element Organisation | Foundation for modern computer design |
| 1959 | IBM Researchers | Architecture Terminology | Formalised computer architecture discipline |

This history shows how early ideas still shape today’s computers. That is why knowing it remains useful for computer design and for developing new ideas.

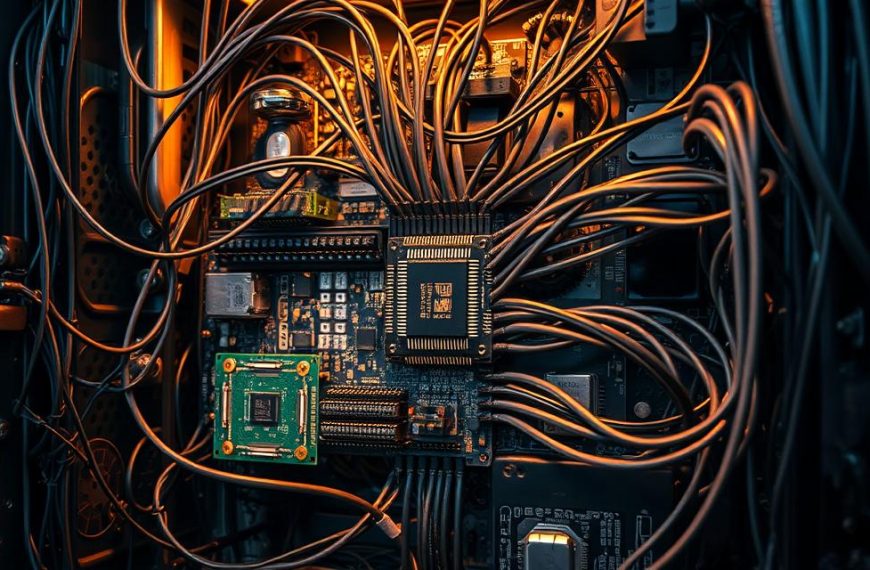

Essential Components of System Architecture

Exploring computer system architecture shows us three key parts that work together. These parts are the heart of every digital device, from phones to supercomputers.

Processor Architecture and Organisation

The central processing unit is the brain of any computer. Modern processor architecture balances processing power against energy use.

Central Processing Unit Design Principles

Today’s CPU design aims to speed up instructions and cut down on delays. The CPU’s main cycle is fetch-decode-execute. Modern CPUs do billions of these cycles every second.

Advanced processors use a technique called instruction pipelining. Execution is split into stages so that several instructions can be in progress at once, each at a different stage. This raises throughput without needing a higher clock speed.

Inside the CPU, different units handle different tasks. Arithmetic logic units perform integer arithmetic and logical operations, and floating-point units handle numbers with fractional parts. The control unit coordinates these units and the flow of instructions.
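
The throughput gain from pipelining can be estimated with an idealised model. The sketch below assumes every stage takes exactly one clock cycle and that there are no stalls or hazards, which real processors do not guarantee. The function names and the five-stage, 1000-instruction figures are illustrative assumptions, not taken from any particular processor.

```python
def cycles_unpipelined(n_instructions: int, n_stages: int) -> int:
    """Each instruction passes through every stage before the next one starts."""
    return n_instructions * n_stages


def cycles_pipelined(n_instructions: int, n_stages: int) -> int:
    """The first instruction fills the pipeline; after that, one completes per cycle."""
    if n_instructions == 0:
        return 0
    return n_stages + (n_instructions - 1)


n, k = 1000, 5  # assumed workload and pipeline depth
print(cycles_unpipelined(n, k))  # 5000
print(cycles_pipelined(n, k))    # 1004
```

Under these assumptions the clock speed is the same in both cases; the gain comes only from overlapping the stages of different instructions.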

“The elegance of modern processor design lies in its ability to execute complex instructions while maintaining architectural simplicity at the hardware level.”

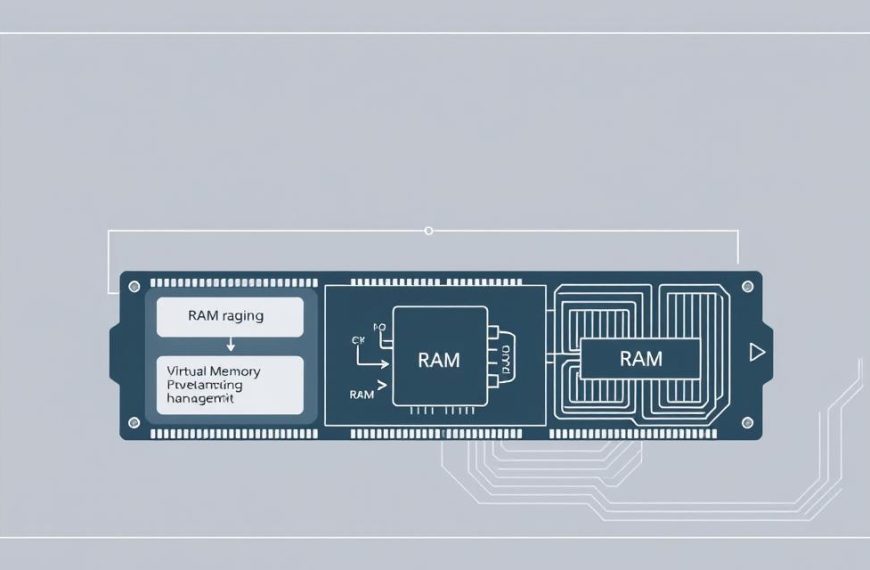

Memory System Architecture

The memory system is a layered storage system. It balances speed, size, and cost. This ensures fast access to data.

Registers are the fastest storage, right in the CPU. Cache memory is a fast buffer between the CPU and main memory. It stores data for quick access.

RAM is the computer’s main memory. It holds active programs and data. Secondary storage devices like solid-state drives and hard disk drives retain data when the power is off.

- Registers: Immediate processor access, minimal capacity

- Cache: High-speed temporary storage, moderate capacity

- RAM: Volatile working memory, substantial capacity

- Secondary storage: Non-volatile permanent storage, massive capacity
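
One common way to reason about the balance between speed and capacity is the average memory access time: the hit time of the fast level plus the miss rate multiplied by the penalty for going to the slower level. The sketch below is a minimal illustration; the latencies and miss rates are made-up round numbers, not measurements from real hardware.

```python
def average_access_time(hit_time_ns: float, miss_rate: float, miss_penalty_ns: float) -> float:
    """Average access time for one cache level in front of a slower memory."""
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be between 0 and 1")
    return hit_time_ns + miss_rate * miss_penalty_ns


# Assumed figures: 1 ns cache hit, 100 ns extra cost when the cache misses.
cache_hit_ns = 1.0
ram_penalty_ns = 100.0
for miss_rate in (0.01, 0.05, 0.20):
    print(miss_rate, average_access_time(cache_hit_ns, miss_rate, ram_penalty_ns))
```

Even a small change in miss rate shifts the average noticeably, which is why a small, fast cache in front of a large, slow memory pays off.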

Input/Output Subsystem Design

The I/O subsystem connects the computer to the outside world. It handles data between peripherals and the main system.

System buses are like highways for data inside the computer. The data bus carries information, the address bus specifies which memory location to access, and the control bus manages timing and signals.
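
The width of the address bus limits how much memory can be addressed directly. As a rough sketch, assuming byte-addressable memory where each address names one byte, an n-bit address can select 2^n locations. The function name here is hypothetical.

```python
def addressable_bytes(address_bits: int, bytes_per_location: int = 1) -> int:
    """Number of bytes reachable with an address of the given width."""
    if address_bits < 0:
        raise ValueError("address_bits must be non-negative")
    return (2 ** address_bits) * bytes_per_location


print(addressable_bytes(16))  # 65536 bytes (64 KiB)
print(addressable_bytes(32))  # 4294967296 bytes (4 GiB)
```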

Modern systems use standards like USB, PCI Express, and SATA. Each standard is best for different data transfer needs and devices.

Direct memory access (DMA) controllers let peripherals transfer data to and from memory without the CPU handling each transfer. This makes systems more efficient by freeing the CPU for other work.

Fundamental Architectural Principles

Computing efficiency comes from key architectural principles. These rules guide how processors, memory, and input/output systems work together. They help us understand why computers are good at some tasks but not others.

Von Neumann Architecture Model

John von Neumann’s 1945 report set out the stored-program design that is still at the heart of today’s computers. It uses one memory for both instructions and data, with a central unit that follows commands one at a time.

The von Neumann model works by repeatedly fetching, decoding, and executing instructions. It reads an instruction from memory, works out what it means, and then carries it out. Because instructions and data share the same memory and the same path to the processor, they compete for that path. This limit is known as the von Neumann bottleneck.

Yet, the von Neumann model is elegant because it treats instructions as data. This allows for modern programming and software development. It’s simple and helps us understand how computers work.
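
A toy simulation can make the stored-program idea concrete. In the sketch below, a single Python list serves as memory and holds both the program and its data, and a loop performs the fetch, decode, and execute steps. The opcodes (`LOAD`, `ADD`, `STORE`, `HALT`) and the tuple encoding are invented for illustration and do not correspond to any real instruction set.

```python
def run(memory):
    """Execute a program stored in `memory`, which also holds its data."""
    acc = 0  # accumulator register
    pc = 0   # program counter: address of the next instruction
    while True:
        opcode, operand = memory[pc]  # fetch
        pc += 1
        if opcode == "LOAD":          # decode and execute
            acc = memory[operand]
        elif opcode == "ADD":
            acc += memory[operand]
        elif opcode == "STORE":
            memory[operand] = acc
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode: {opcode}")


program = [
    ("LOAD", 4),   # address 0: acc = memory[4]
    ("ADD", 5),    # address 1: acc += memory[5]
    ("STORE", 6),  # address 2: memory[6] = acc
    ("HALT", 0),   # address 3: stop
    2,             # address 4: data
    3,             # address 5: data
    0,             # address 6: result goes here
]

result = run(list(program))
print(result[6])  # 5
```

Instructions and data sit in the same list and are reached through the same indexing operation, which mirrors both the flexibility of the model and the shared path behind its bottleneck.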

Instruction Set Architecture Concepts

The instruction set architecture is the interface between a computer’s hardware and software. It defines the machine code that processors understand. This layer lets programmers write code without knowing the hardware details.

Important parts of instruction set architecture include:

- Word size – how much data the processor handles at once

- Memory addressing modes – how instructions find memory locations

- Processor registers – fast storage in the CPU

- Data types – how different data is represented and handled
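
Addressing modes are easier to compare side by side. The sketch below models four commonly described modes over a small list standing in for memory. The mode names, the `index` register, and the memory contents are illustrative assumptions rather than the definition of any specific ISA.

```python
def fetch_operand(mode, operand, memory, registers):
    """Return the value an instruction would operate on under each mode."""
    if mode == "immediate":  # the operand is the value itself
        return operand
    if mode == "direct":     # the operand is the address of the value
        return memory[operand]
    if mode == "indirect":   # the operand is the address of the address
        return memory[memory[operand]]
    if mode == "indexed":    # base address plus an index register
        return memory[operand + registers["index"]]
    raise ValueError(f"unknown addressing mode: {mode}")


memory = [10, 3, 99, 42, 7]
registers = {"index": 2}

print(fetch_operand("immediate", 1, memory, registers))  # 1
print(fetch_operand("direct", 1, memory, registers))     # 3
print(fetch_operand("indirect", 1, memory, registers))   # 42
print(fetch_operand("indexed", 2, memory, registers))    # 7
```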

Different designs balance complexity and performance. Some have complex instructions for detailed work, while others are simple and fast. This choice affects how well a processor works and what it’s good for.

Parallel and Distributed Architecture Approaches

Parallel computing uses many processing elements to work together. This improves performance for certain tasks. It ranges from multi-core processors to huge supercomputing clusters.
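
Why parallelism helps only certain tasks can be illustrated with Amdahl’s law, which bounds the speedup by the fraction of work that can actually run in parallel. This is a simplified model that ignores communication and synchronisation costs; the 90% parallel fraction below is an assumed figure chosen for illustration.

```python
def amdahl_speedup(parallel_fraction: float, n_processors: int) -> float:
    """Ideal speedup when only part of a task can be spread across processors."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if n_processors < 1:
        raise ValueError("n_processors must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / n_processors)


for n in (2, 8, 64):
    print(n, round(amdahl_speedup(0.9, n), 2))
```

With 10% of the work left serial, the speedup can never exceed 10 in this model, however many processing elements are added.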

Distributed systems go further by linking many computers together. They share resources across different machines, making systems more reliable and scalable. Cloud computing is a modern example of this.

Both parallel and distributed systems are complex. They need careful coordination and data management. The choice between them depends on what you need in terms of performance and complexity.

From von Neumann’s model to today’s parallel and distributed systems, architecture shapes computing. Each choice affects performance, complexity, cost, and specialisation needs.

Contemporary Architectural Implementations

Today’s computer architectures are designed for specific needs and tasks. They keep getting better, tackling the complex tasks of modern computing with new designs.

RISC and CISC Architectural Paradigms

The RISC architecture and CISC architecture are two main ways to design processors. RISC uses simple instructions for fast execution. CISC does complex tasks with one instruction.
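
The difference can be sketched with a toy example: adding two values held in memory and storing the result. The functions and the instruction names in the comments are hypothetical and do not model any real processor. They only show that one complex instruction and a sequence of simple ones can do the same work.

```python
def cisc_add(memory, dst, src_a, src_b):
    """One complex instruction: read two memory operands, add, write back."""
    memory[dst] = memory[src_a] + memory[src_b]  # ADD [dst], [src_a], [src_b]
    return 1  # instructions issued


def risc_add(memory, dst, src_a, src_b):
    """The same work as simple steps that touch memory only via load/store."""
    regs = {}
    regs["r1"] = memory[src_a]            # LOAD  r1, [src_a]
    regs["r2"] = memory[src_b]            # LOAD  r2, [src_b]
    regs["r3"] = regs["r1"] + regs["r2"]  # ADD   r3, r1, r2
    memory[dst] = regs["r3"]              # STORE [dst], r3
    return 4  # instructions issued


mem_a = [2, 3, 0]
mem_b = [2, 3, 0]
print(cisc_add(mem_a, 2, 0, 1), mem_a)
print(risc_add(mem_b, 2, 0, 1), mem_b)
```

The instruction counts say nothing about how many clock cycles each approach takes; that depends on the hardware implementation.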

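In practice, modern processors blend both approaches. Many CISC designs, for example, decode their complex instructions into simpler internal operations before executing them. Combined with multiple cores, which let a chip run several tasks at once, this mix works well for everything from phones to supercomputers.

RISC designs are often favoured where power efficiency matters, such as devices that need to last long on a charge. CISC designs can express a complex operation in a single instruction, which can make programs more compact.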

Specialised Processing Architectures

Specialised processors are made for specific tasks and do them very well. Graphics Processing Units (GPUs) are a big example of this.

GPUs excel at applying the same operation to many data elements at once. They’re well suited to graphics, machine learning, and scientific work. Digital Signal Processors (DSPs) are also specialised, focusing on real-time signal processing.

Other processors include AI accelerators and those for cryptography. These are made for specific tasks and do them better than general-purpose processors.

Cloud and Enterprise System Architectures

Cloud computing spreads tasks over many computers, making it very scalable. This changes how we use and manage computing resources.

Enterprise systems need to be reliable and always available. They use redundant components and failover mechanisms to keep running when a part fails.
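
The benefit of redundancy can be estimated with a simple probability model. The sketch below assumes that replicas fail independently and that the service stays up as long as at least one replica is working; both assumptions are idealisations, and the 99% figure is illustrative only.

```python
def redundant_availability(component_availability: float, n_replicas: int) -> float:
    """Availability of a group of replicas where any one is enough to serve."""
    if not 0.0 <= component_availability <= 1.0:
        raise ValueError("component_availability must be between 0 and 1")
    if n_replicas < 1:
        raise ValueError("n_replicas must be at least 1")
    # The group is down only when every replica is down at the same time.
    return 1.0 - (1.0 - component_availability) ** n_replicas


for n in (1, 2, 3):
    print(n, redundant_availability(0.99, n))
```

Correlated failures, such as a shared power supply or a common software bug, would make real systems less available than this model suggests.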

Modern enterprise systems use virtualisation and containerisation. These help use resources better and keep different tasks separate.

Cloud and enterprise systems also focus on keeping data safe. They use dedicated hardware and software to protect data and meet regulatory requirements.

Conclusion

Computer system architecture is like a blueprint for how machines work. It shows how data is processed, stored, and managed. This summary explains the roles of processors, memory, and I/O systems in making computers efficient.

Architectural principles, from old models to new ones, help design and improve systems. They are the base for how computers perform.

Nowadays, we see a move towards special processing for AI and quantum computing. These advancements could change how we solve problems and automate tasks. It’s important for tech experts to keep up with these changes.

Knowing about computer architecture helps make better choices in system selection and improvement. It balances speed, cost, and reliability in a changing tech world. This knowledge is key for facing future computing challenges and innovations.